# Understanding Regularization in Machine Learning

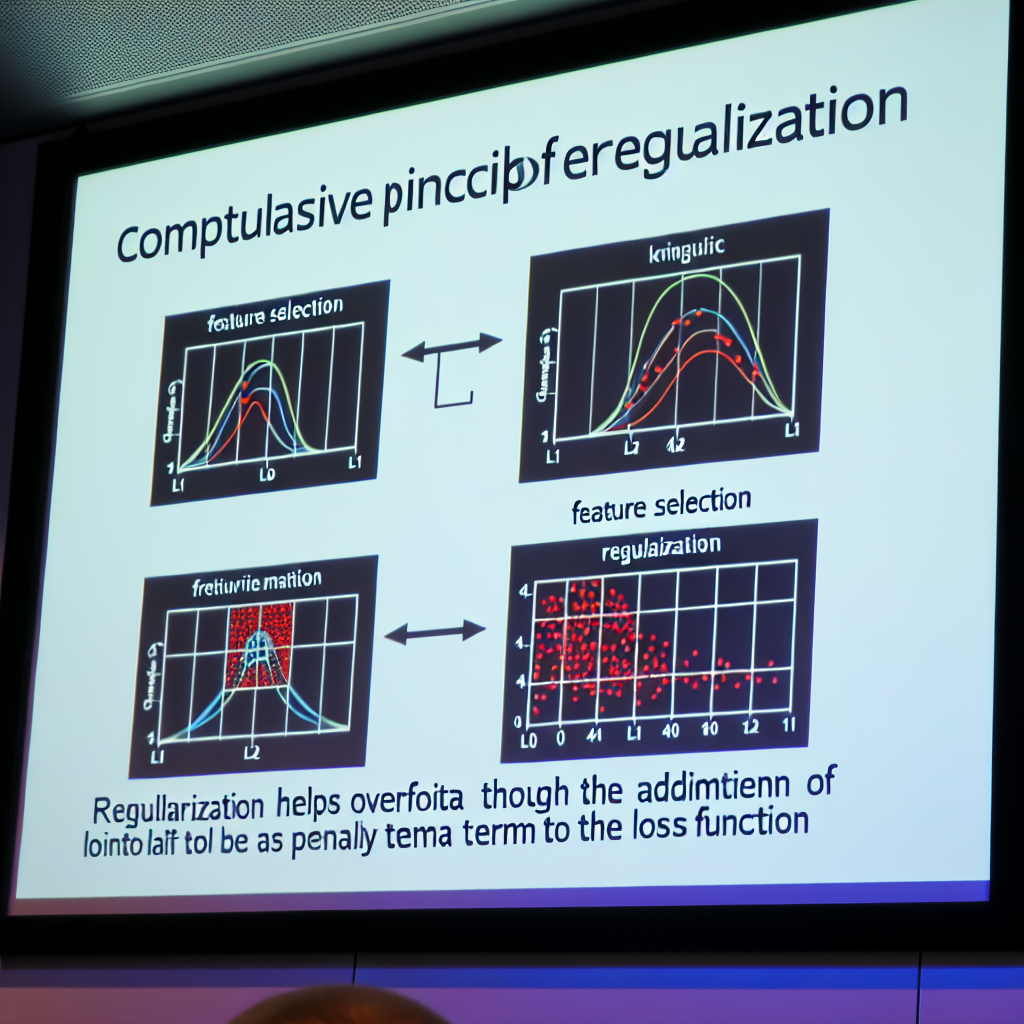

Regularization is a core concept in machine learning that improves how well models generalize. It prevents overfitting, most commonly by adding a penalty term to the loss function, which keeps the model from becoming too complex and fitting the training data too closely.

## The Problem of Overfitting

Overfitting occurs when a machine learning model learns the noise and details in the training data to such an extent that it negatively impacts the model’s performance on new, unseen data. This is a common problem, especially when the model is too complex, having too many parameters relative to the number of observations. Overfitted models have high variance and low bias, meaning they perform well on training data but poorly on test data.
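To make the training/test gap concrete, here is a minimal sketch that fits a low-degree and a high-degree polynomial to the same small, noisy sample. The data-generating function, noise level, sample sizes, and polynomial degrees are all assumptions chosen for illustration, not values from any particular study.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_data(n):
    # Hypothetical ground truth: a sine curve plus Gaussian noise.
    x = rng.uniform(-1.0, 1.0, size=n)
    y = np.sin(np.pi * x) + rng.normal(scale=0.3, size=n)
    return x, y

x_train, y_train = make_data(15)   # few observations
x_test, y_test = make_data(200)    # fresh data from the same process

def fit_and_score(degree):
    # Least-squares polynomial fit; more degrees means more parameters.
    coeffs = np.polyfit(x_train, y_train, deg=degree)
    train_mse = np.mean((np.polyval(coeffs, x_train) - y_train) ** 2)
    test_mse = np.mean((np.polyval(coeffs, x_test) - y_test) ** 2)
    return train_mse, test_mse

results = {degree: fit_and_score(degree) for degree in (3, 12)}
for degree, (train_mse, test_mse) in results.items():
    print(f"degree={degree:2d}  train MSE={train_mse:.4f}  test MSE={test_mse:.4f}")
```

The higher-degree fit can never have a larger training error than the lower-degree one, because it contains the simpler model as a special case. Its test error, however, is typically worse when there are so few observations per parameter, which is the signature of overfitting.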

## Introduction to Regularization

Regularization introduces additional information, in the form of a constraint or penalty, to prevent overfitting and improve generalization. Adding a regularization term to the loss function constrains the coefficient estimates, shrinking them towards zero. The penalty discourages overly complex or flexible fits, thereby reducing overfitting.
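One common way to write the penalized objective is shown below. The symbols are notation assumed here for illustration: $\mathbf{w}$ is the vector of model coefficients, $L(\mathbf{w})$ is the original loss on the training data, $R(\mathbf{w})$ is the penalty, and $\lambda \ge 0$ is the regularization strength.

$$
J(\mathbf{w}) = L(\mathbf{w}) + \lambda \, R(\mathbf{w}), \qquad \lambda \ge 0
$$

Setting $\lambda = 0$ recovers the unregularized loss, while larger values of $\lambda$ push the coefficients more strongly towards zero. In practice $\lambda$ is usually chosen with a validation set or cross-validation.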

### Types of Regularization

There are various types of regularization techniques, but the most common ones are L1 regularization (Lasso) and L2 regularization (Ridge). Both methods add a penalty term to the loss function, but they differ in how they penalize the coefficients.

#### L1 Regularization

L1 regularization, also known as Lasso (Least Absolute Shrinkage and Selection Operator), adds the sum of the absolute values of the coefficients as a penalty term to the loss function. This results in sparse models with few nonzero coefficients, effectively performing feature selection by shrinking some coefficients to exactly zero. It is particularly useful when we have a large number of features and suspect that only a subset of them is useful.
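The "exactly zero" behavior can be seen in the soft-thresholding operator. In the special case of an orthonormal design matrix, and with the objective written as half the squared error plus `lam` times the L1 norm, the Lasso solution is the least-squares coefficients passed through this operator. The sketch below assumes that setting; the coefficient values and `lam` are made up for illustration.

```python
def soft_threshold(w, lam):
    """Shrink w towards zero by lam; anything within [-lam, lam] becomes 0."""
    if w > lam:
        return w - lam
    if w < -lam:
        return w + lam
    return 0.0

# Hypothetical least-squares coefficients and penalty strength.
ols_coeffs = [3.0, -0.4, 0.05, -2.0, 0.3]
lam = 0.5

sparse_coeffs = [soft_threshold(w, lam) for w in ols_coeffs]
print(sparse_coeffs)  # [2.5, 0.0, 0.0, -1.5, 0.0]
```

Coefficients smaller in magnitude than `lam` are removed entirely, while the larger ones are kept but reduced by `lam`. General-purpose Lasso solvers do not use this closed form directly, but many of them apply the same operator inside an iterative loop.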

#### L2 Regularization

L2 regularization, also known as Ridge regression, adds the sum of the squared coefficients as a penalty term to the loss function. Unlike L1 regularization, it generally does not produce sparse models: it shrinks the coefficients towards zero without setting them exactly to zero, so all features continue to contribute to the prediction. It is useful when all input features are believed to be relevant, but we want to avoid overfitting by controlling the magnitude of the coefficients.
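For linear regression with a squared-error loss, the L2-penalized problem has a closed-form solution, which makes the shrinkage easy to observe. The following is a minimal sketch using NumPy on synthetic data; the data, the true coefficients, and the `lam` values are assumptions for illustration, and no intercept is fitted.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Solve (X^T X + lam * I) w = X^T y for the ridge coefficients w."""
    n_features = X.shape[1]
    A = X.T @ X + lam * np.eye(n_features)
    return np.linalg.solve(A, X.T @ y)

rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
true_w = np.array([2.0, -1.0, 0.5])            # hypothetical ground truth
y = X @ true_w + rng.normal(scale=0.1, size=50)

w_unregularized = ridge_fit(X, y, lam=0.0)     # ordinary least squares
w_ridge = ridge_fit(X, y, lam=10.0)

print("lam=0 :", w_unregularized, "norm:", np.linalg.norm(w_unregularized))
print("lam=10:", w_ridge, "norm:", np.linalg.norm(w_ridge))
```

The coefficient vector for the larger `lam` has a smaller norm, but its entries are shrunk rather than eliminated, in contrast to the L1 case.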

#### Elastic Net Regularization

Elastic Net is a regularization technique that combines the L1 and L2 penalties. It is particularly useful for datasets with highly correlated features, where Lasso alone tends to keep one feature from a correlated group and drop the rest somewhat arbitrarily. By balancing the two penalties, Elastic Net can produce a more stable model that retains the sparsity of Lasso and the smooth shrinkage of Ridge regression.
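As a rough sketch, the combined penalty can be written as a weighted mix of the two terms. The function name `elastic_net_penalty` and the parameters `lam` and `l1_ratio` are assumptions made for this example; libraries use different parameterizations and scaling constants, so check the documentation of whichever one you use.

```python
def elastic_net_penalty(weights, lam, l1_ratio):
    """Mix of L1 and L2 penalties; l1_ratio=1 is pure L1, l1_ratio=0 is pure L2."""
    l1_term = sum(abs(w) for w in weights)
    l2_term = sum(w * w for w in weights)
    return lam * (l1_ratio * l1_term + (1.0 - l1_ratio) * l2_term)

weights = [1.0, -2.0, 0.0]  # hypothetical coefficients
for ratio in (0.0, 0.5, 1.0):
    print(ratio, elastic_net_penalty(weights, lam=0.1, l1_ratio=ratio))
```

Both `lam` (overall strength) and `l1_ratio` (balance between the penalties) are hyperparameters, and they are usually tuned together on held-out data.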

## Practical Applications of Regularization

Regularization is widely used in various machine learning algorithms, including linear regression, logistic regression, and neural networks. In linear regression, regularization mitigates the effects of multicollinearity by stabilizing the coefficient estimates, and L1 penalties in particular can improve interpretability by removing features. In logistic regression, it prevents overfitting and enhances the model’s ability to generalize to new data. In neural networks, regularization techniques such as dropout and weight decay are widely used when training deep learning models to keep them from overfitting the training data.
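The two neural-network techniques mentioned above can be sketched in a few lines of NumPy. This is an illustrative simplification rather than how any particular framework implements them: the function names, learning rate, decay factor, and dropout probability are assumptions, and the dropout shown is the common "inverted" variant that rescales the surviving activations during training.

```python
import numpy as np

def sgd_step_with_weight_decay(w, grad, lr, weight_decay):
    """One SGD step where an extra term pulls the weights towards zero."""
    return w - lr * (grad + weight_decay * w)

def dropout(x, p, rng, training=True):
    """Zero each activation with probability p; rescale survivors by 1/(1-p)."""
    if not training or p == 0.0:
        return x  # dropout is disabled at evaluation time
    keep_mask = rng.random(x.shape) >= p
    return x * keep_mask / (1.0 - p)

rng = np.random.default_rng(0)

w = np.array([1.0, -2.0])
w_next = sgd_step_with_weight_decay(w, grad=np.zeros(2), lr=0.1, weight_decay=0.5)
print(w_next)  # with a zero gradient, the weights simply shrink by 5%

activations = np.ones(1000)
dropped = dropout(activations, p=0.5, rng=rng)
print(dropped[:10], dropped.mean())
```

With plain SGD, this form of weight decay is equivalent to adding an L2 penalty to the loss, which links it back to Ridge-style shrinkage. Dropout works differently: it regularizes by randomly removing units during training so that the network cannot rely too heavily on any single one.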

## Conclusion

In summary, regularization is a powerful technique in machine learning that improves generalization by preventing overfitting. By adding a penalty term to the loss function, it constrains the model’s complexity, typically trading a small increase in training error for better performance on unseen data. Understanding and applying regularization techniques such as L1, L2, and Elastic Net can significantly enhance the robustness and reliability of machine learning models.