Understanding Decision Trees in Machine Learning

Introduction

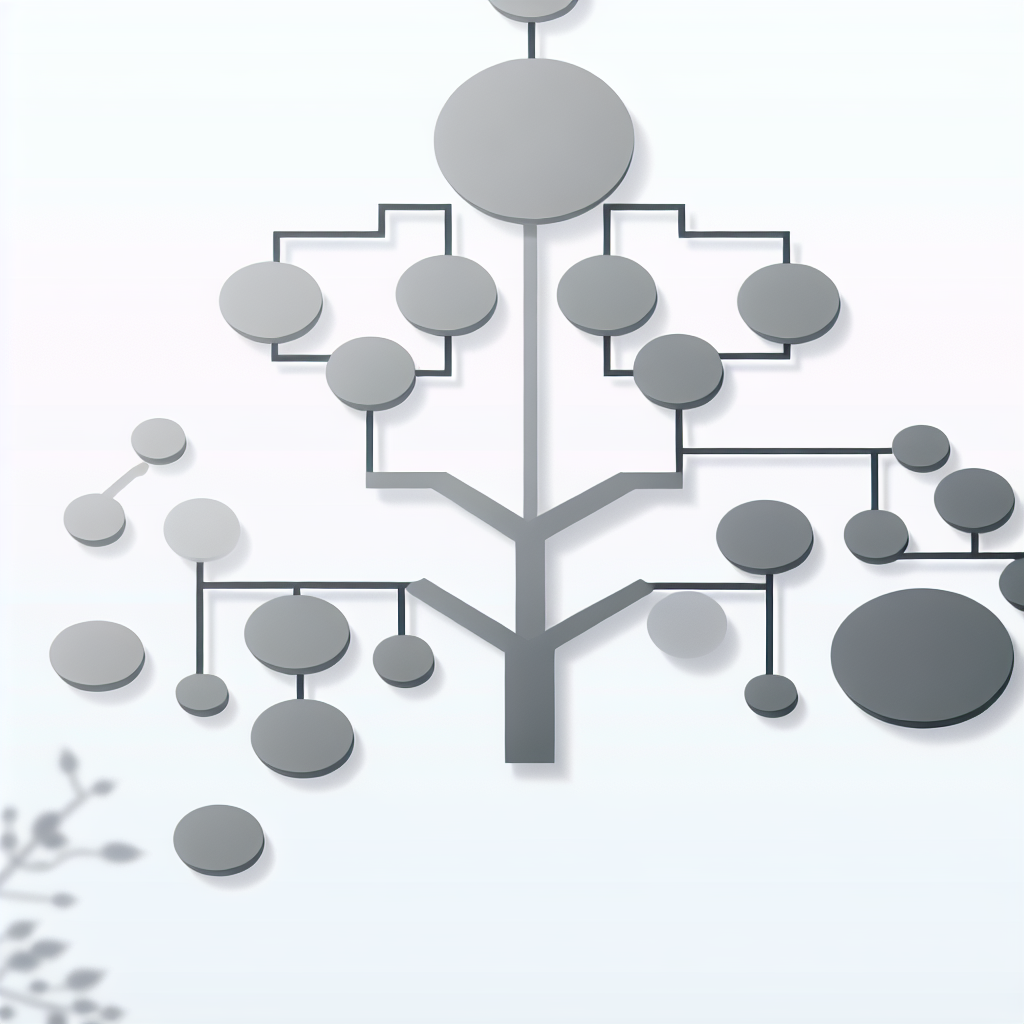

Decision trees are an intuitive and widely used machine learning model for classification and regression tasks. They mimic human decision-making by breaking a complex decision into a sequence of simpler choices, which are often (though not always) binary. Each decision point, known as a node, represents a question about the data, and the branches represent the possible answers, leading either to further nodes or to final outcomes known as leaves.

Structure of Decision Trees

A decision tree consists of several key components: the root node, internal nodes, branches, and leaf nodes. The root node is the top-most node; it covers the entire dataset and asks the initial question. Internal nodes ask subsequent questions that depend on the answers to previous ones, while branches connect the nodes and represent the possible answers. Leaf nodes are the terminal nodes that provide the final prediction: a class label in classification or a numeric value in regression. This hierarchical structure breaks the decision-making process into manageable steps.
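The components described above can be sketched as a small data structure. This is only an illustrative sketch: the names `Leaf`, `Node`, and `predict`, the features `age` and `income`, and the thresholds are all hypothetical, and the tree is assumed to ask binary "feature <= threshold" questions.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    prediction: str  # final decision stored at a terminal node


@dataclass
class Node:
    feature: str                 # feature the question is about
    threshold: float             # question: "is sample[feature] <= threshold?"
    left: Union["Node", Leaf]    # branch followed when the answer is yes
    right: Union["Node", Leaf]   # branch followed when the answer is no


def predict(tree, sample):
    """Walk from the root to a leaf, answering one question per node."""
    while isinstance(tree, Node):
        if sample[tree.feature] <= tree.threshold:
            tree = tree.left
        else:
            tree = tree.right
    return tree.prediction


# Hypothetical hand-built tree: the root asks about age, one internal node about income.
tree = Node(
    "age", 30,
    Leaf("declined"),
    Node("income", 50000, Leaf("declined"), Leaf("approved")),
)

print(predict(tree, {"age": 40, "income": 60000}))
```

Each call to `predict` follows exactly one path from the root to a leaf, which is why a single prediction can be read off as a short chain of questions and answers.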

Building a Decision Tree

Constructing a decision tree involves selecting the best attribute to split the data at each node. This selection is typically based on criteria such as information gain, Gini impurity, or chi-square tests. Information gain measures the reduction in entropy (disorder) achieved by splitting a dataset on an attribute. Gini impurity is the probability of misclassifying a randomly chosen element if it were labeled at random according to the distribution of labels at that node. At each node, the algorithm picks the attribute whose split scores best under the chosen criterion, that is, the one that separates the classes most cleanly.
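To make the two most common criteria concrete, here is a minimal sketch that computes entropy, Gini impurity, and information gain for a candidate split. The function names and the toy labels are assumptions made for illustration; real libraries compute the same quantities with more optimized code.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def information_gain(parent, children):
    """Parent entropy minus the size-weighted entropy of the child subsets."""
    n = len(parent)
    weighted = sum(len(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted


# Toy example: 5 "yes" and 5 "no" labels, split into two mostly pure groups.
parent = ["yes"] * 5 + ["no"] * 5
split = [["yes"] * 4 + ["no"], ["yes"] + ["no"] * 4]

print(entropy(parent))                  # maximal for two balanced classes
print(gini(parent))
print(information_gain(parent, split))  # positive: the split reduces disorder
```

A tree builder would evaluate such a score for every candidate split at a node and keep the split with the highest information gain (or the lowest weighted Gini impurity).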

Advantages of Decision Trees

Decision trees offer several advantages that make them popular in machine learning. Firstly, they are easy to understand and interpret, because the decision-making process can be drawn as a diagram and read as a set of rules. This makes them particularly useful for non-experts and stakeholders who need to understand the model’s reasoning. Secondly, they require little data preprocessing: features do not need to be scaled or normalized, and the algorithm can work with both numerical and categorical data, although some implementations require categorical features to be encoded numerically first. Additionally, decision trees are non-parametric, meaning they do not assume any specific distribution for the data, which makes them applicable to a wide range of problems.

Challenges and Limitations

Despite their advantages, decision trees also have limitations. One of the primary challenges is their tendency to overfit the training data, especially when the tree grows too deep. Overfitting occurs when the model captures noise and anomalies in the training data, leading to poor generalization to new data. To mitigate this, pruning removes parts of the tree that contribute little predictive power; limiting the maximum depth or requiring a minimum number of samples per leaf serves a similar purpose. Another limitation is instability: small changes in the training data can produce an entirely different tree.
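One simple form of pruning is reduced-error pruning, sketched below under several assumptions: the tree is a hypothetical nested dict whose internal nodes store the majority training label under the key `majority`, leaves are plain labels, and a held-out validation set is available. Working bottom-up, a subtree is replaced by a leaf whenever the leaf is at least as accurate on the validation rows that reach it. This is an illustration of the idea, not the pruning method of any particular library.

```python
def predict(tree, x):
    """Follow 'feature <= threshold' questions until a leaf label is reached."""
    while isinstance(tree, dict):
        branch = "left" if x[tree["feature"]] <= tree["threshold"] else "right"
        tree = tree[branch]
    return tree


def accuracy(tree, rows):
    return sum(predict(tree, x) == y for x, y in rows) / len(rows)


def prune(tree, rows):
    """Reduced-error pruning using held-out (sample, label) rows."""
    if not isinstance(tree, dict) or not rows:
        return tree  # leaves, and subtrees with no validation evidence, are kept as-is
    f, t = tree["feature"], tree["threshold"]
    left_rows = [(x, y) for x, y in rows if x[f] <= t]
    right_rows = [(x, y) for x, y in rows if x[f] > t]
    # Prune the children first, then decide whether to collapse this node.
    pruned = dict(tree,
                  left=prune(tree["left"], left_rows),
                  right=prune(tree["right"], right_rows))
    leaf_correct = sum(y == tree["majority"] for _, y in rows)
    subtree_correct = sum(predict(pruned, x) == y for x, y in rows)
    return tree["majority"] if leaf_correct >= subtree_correct else pruned


# Hypothetical overfitted tree: the inner split at x <= 2 only fits training noise.
tree = {
    "feature": "x", "threshold": 5, "majority": "a",
    "left": {"feature": "x", "threshold": 2, "majority": "a",
             "left": "a", "right": "b"},
    "right": "b",
}
validation = [({"x": 1}, "a"), ({"x": 3}, "a"), ({"x": 4}, "a"),
              ({"x": 7}, "b"), ({"x": 9}, "b")]

pruned = prune(tree, validation)
print(accuracy(tree, validation), accuracy(pruned, validation))
```

In this toy case the noisy inner split is collapsed into a single leaf while the useful root split is kept, so the pruned tree is both smaller and more accurate on the held-out rows.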

Applications of Decision Trees

Decision trees are widely used in various domains due to their versatility and interpretability. In finance, they are used for credit scoring and risk assessment. In healthcare, they assist in diagnosing diseases and predicting patient outcomes. Marketing teams use decision trees for customer segmentation and targeting. They are also employed in engineering for quality control and fault detection. The ability to handle diverse datasets and provide clear decision rules makes them valuable across different industries.

Enhancements and Variants

Several enhancements and variants of decision trees have been developed to improve their performance. Random forests, for instance, train many decision trees on bootstrap samples of the data, considering a random subset of features at each split, and combine their predictions by voting or averaging; this reduces overfitting and usually improves accuracy over a single tree. Gradient boosting machines (GBMs) build trees sequentially, with each new tree fitted to correct the errors of the ensemble built so far. These ensemble techniques keep the strengths of individual trees while mitigating their weaknesses, leading to more robust and accurate models, at the cost of some interpretability.
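The sequential idea behind gradient boosting can be sketched for one-dimensional regression with squared error, where "correcting the errors" means fitting each new tree to the current residuals. Everything below is a simplified illustration: the helper names, the use of depth-one trees (stumps), the learning rate, and the toy data are all assumptions, and random forests are not covered by this sketch.

```python
def fit_stump(xs, residuals):
    """Find the single threshold split that minimizes squared error on the residuals."""
    best = None
    for t in sorted(set(xs))[:-1]:  # skip the largest value so both sides are non-empty
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    return best[1:]  # (threshold, left_value, right_value)


def boost(xs, ys, n_trees=20, learning_rate=0.5):
    """Start from the mean, then repeatedly fit a stump to the remaining residuals."""
    base = sum(ys) / len(ys)
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, preds)]
        t, lm, rm = fit_stump(xs, residuals)
        stumps.append((t, lm, rm))
        preds = [p + learning_rate * (lm if x <= t else rm)
                 for x, p in zip(xs, preds)]
    return base, learning_rate, stumps


def predict(model, x):
    base, learning_rate, stumps = model
    return base + sum(learning_rate * (lm if x <= t else rm) for t, lm, rm in stumps)


def mse(model, xs, ys):
    return sum((predict(model, x) - y) ** 2 for x, y in zip(xs, ys)) / len(ys)


# Toy data with a jump between x = 3 and x = 4.
xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 0.9, 3.1, 2.9, 3.0]

baseline = boost(xs, ys, n_trees=0)  # predicts the mean only
model = boost(xs, ys)
print(mse(baseline, xs, ys), mse(model, xs, ys))
```

Each stump on its own is a very weak model, but because every round targets what the previous rounds got wrong, the training error of the combined model falls well below that of the mean-only baseline.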

Conclusion

In summary, decision trees are a fundamental machine learning algorithm. Their intuitive structure, ease of interpretation, and ability to handle different types of data make them a popular choice for many applications. While they are prone to overfitting and instability, techniques like pruning and ensemble methods substantially reduce these problems. Understanding decision trees provides a strong foundation for exploring more complex machine learning techniques.