Support Vector Machines

Introduction to Support Vector Machines

Support Vector Machines (SVMs) are a set of supervised learning methods used for classification, regression, and outlier detection. They are best known for classification. An SVM classifier works by finding the hyperplane that separates the classes in the feature space with the widest possible margin. The method is effective in high-dimensional spaces and is versatile because it can use different kernel functions.

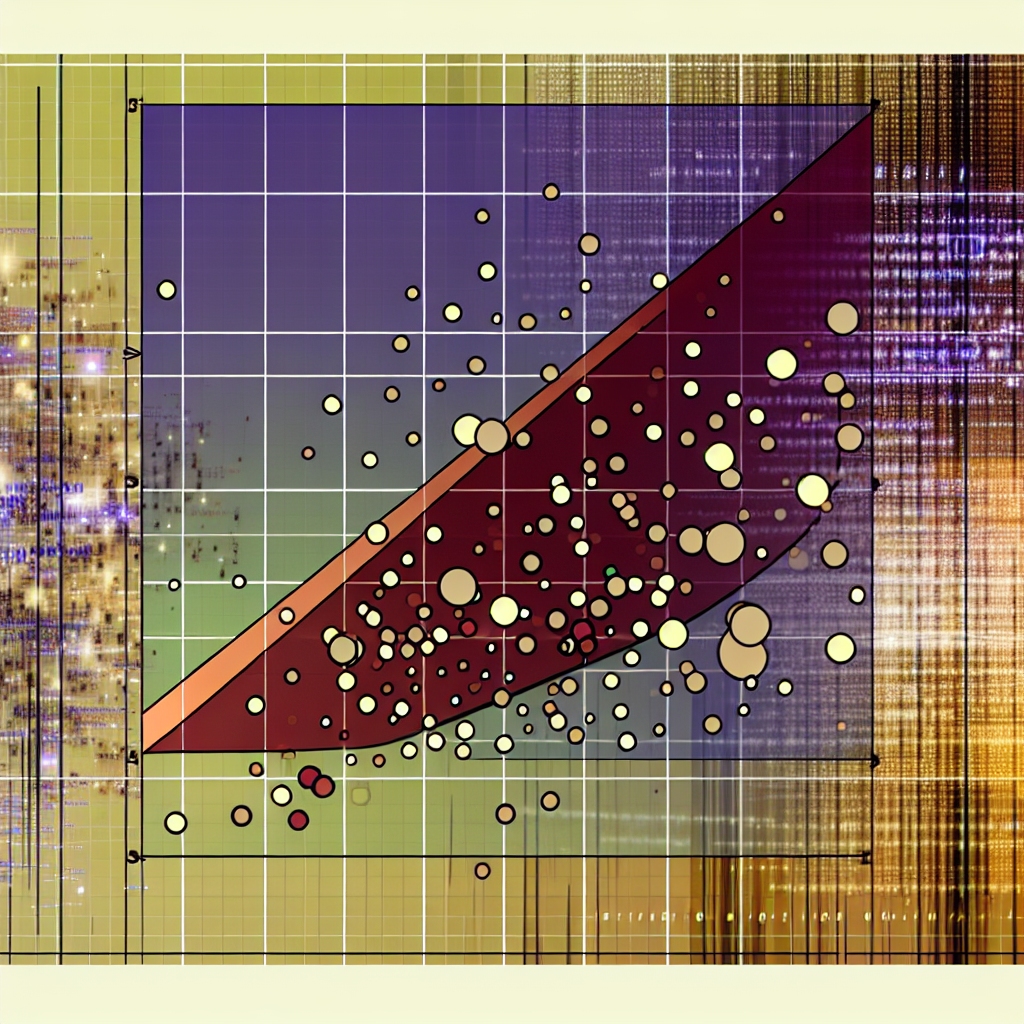

The Working Principle

The main principle behind SVMs is to find a hyperplane that maximizes the margin between the classes. The margin is the distance between the hyperplane and the nearest data points of each class; these nearest points are known as support vectors. By maximizing the margin, SVMs aim to improve the model’s ability to generalize to new data. Finding the optimal hyperplane requires solving a quadratic optimization problem.
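To make the margin concrete, here is a minimal sketch that measures it for two hand-picked separating hyperplanes on a toy dataset. The data, the two candidate hyperplanes, and the helper names (`signed_distances`, `margin_and_support`) are assumptions made for illustration. No optimizer is involved; the code only evaluates the quantity an SVM would maximize.

```python
import math

def signed_distances(w, b, points, labels):
    """Signed distance y * (w.x + b) / ||w|| of each point to the hyperplane."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return [
        y * (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm
        for x, y in zip(points, labels)
    ]

def margin_and_support(w, b, points, labels, tol=1e-9):
    """Return the margin (smallest distance) and the points that attain it."""
    dists = signed_distances(w, b, points, labels)
    margin = min(dists)
    support = [p for p, d in zip(points, dists) if abs(d - margin) <= tol]
    return margin, support

# Hypothetical toy data: two linearly separable classes in 2D.
points = [(1.0, 1.0), (2.0, 0.0), (4.0, 4.0), (5.0, 3.0)]
labels = [-1, -1, 1, 1]

# Candidate hyperplanes w.x + b = 0, chosen by hand rather than by a solver.
margin_diag, support_diag = margin_and_support((1.0, 1.0), -5.0, points, labels)
margin_vert, support_vert = margin_and_support((1.0, 0.0), -3.0, points, labels)

print("x1 + x2 = 5:", margin_diag, support_diag)
print("x1 = 3:     ", margin_vert, support_vert)
```

Both hyperplanes classify every point correctly, but the diagonal one keeps the nearest points farther away, so it is the one a margin-maximizing method would prefer of the two.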

Kernel Trick

One of the key features of SVMs is the kernel trick. The kernel trick allows SVMs to operate in a high-dimensional space without explicitly computing the coordinates of the data in that space. Instead, a kernel function computes the inner products between the images of all pairs of data points in the feature space directly from the original inputs. Commonly used kernels include the linear, polynomial, radial basis function (RBF), and sigmoid kernels. The choice of kernel can significantly impact the performance of the SVM.
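The equivalence behind the kernel trick can be checked by hand for a small case. The sketch below assumes a homogeneous degree-2 polynomial kernel on 2D inputs, K(x, z) = (x . z)^2, whose explicit feature map is (x1^2, sqrt(2) x1 x2, x2^2). The function names and sample vectors are illustrative.

```python
import math

def dot(u, v):
    """Ordinary inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def poly2_kernel(x, z):
    """Degree-2 polynomial kernel, computed in the original 2D space."""
    return dot(x, z) ** 2

def poly2_feature_map(x):
    """Explicit 3D feature map whose inner product equals poly2_kernel."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

x = (1.0, 2.0)
z = (3.0, 4.0)

via_kernel = poly2_kernel(x, z)                               # no mapping needed
via_mapping = dot(poly2_feature_map(x), poly2_feature_map(z))  # map, then dot

print(via_kernel, via_mapping)
```

Both routes give the same value up to floating-point rounding, but the kernel route never constructs the higher-dimensional vectors. For kernels such as the RBF, the corresponding feature space is infinite-dimensional, so the kernel route is the only practical one.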

Applications of SVMs

SVMs have a wide range of applications in various fields. They are used in text and hypertext categorization, image classification, bioinformatics (e.g., protein classification), and handwriting recognition. Their ability to handle high-dimensional data makes them suitable for complex problems where other algorithms might struggle. In addition, margin maximization combined with regularization makes SVMs relatively resistant to overfitting, especially in high-dimensional spaces, although a poorly chosen kernel or regularization parameter can still lead to an overfit model.

Advantages of SVMs

One of the primary advantages of SVMs is their effectiveness in high-dimensional spaces. They remain useful even when the number of dimensions exceeds the number of samples. SVMs are also memory efficient, since the decision function uses only a subset of the training points (the support vectors). Furthermore, SVMs are versatile, thanks to the use of different kernel functions, and they have a well-defined theoretical foundation: training is a convex optimization problem, so the solution found is a global optimum rather than a local one.
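The memory-efficiency point follows from the form of the decision function, f(x) = sum_i alpha_i y_i K(x_i, x) + b, where the sum runs over support vectors only. The sketch below assumes a linear kernel and a hypothetical four-point training set, (1, 1) and (2, 0) in the negative class, (4, 4) and (5, 3) in the positive class. The coefficients `alphas` and `bias` were derived by hand for that toy problem, not produced by a solver.

```python
def linear_kernel(x, z):
    """Inner product in the original input space."""
    return sum(a * b for a, b in zip(x, z))

# Only two of the four hypothetical training points need to be stored.
support_vectors = [(1.0, 1.0), (4.0, 4.0)]
support_labels = [-1, 1]
alphas = [1 / 9, 1 / 9]   # hand-derived dual coefficients (assumption)
bias = -5 / 3             # hand-derived intercept (assumption)

def decision_function(x, kernel=linear_kernel):
    """f(x) = sum_i alpha_i * y_i * K(x_i, x) + b over support vectors only."""
    total = sum(
        a * y * kernel(sv, x)
        for a, y, sv in zip(alphas, support_labels, support_vectors)
    )
    return total + bias

def predict(x):
    """Class label is the sign of the decision function."""
    return 1 if decision_function(x) >= 0 else -1

for point in [(2.0, 0.0), (5.0, 3.0), (0.0, 0.0)]:
    print(point, decision_function(point), predict(point))
```

The training points (2, 0) and (5, 3) are classified correctly even though they are not stored in the model. Swapping `linear_kernel` for another kernel changes the shape of the decision boundary without changing this structure.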

Limitations of SVMs

Despite their many advantages, SVMs have some limitations. Training can be computationally intensive: for kernel SVMs the cost typically grows at least quadratically with the number of samples, which makes them less suitable for very large datasets. The choice of the kernel function and its parameters can significantly affect the model’s performance, requiring careful tuning. Additionally, SVMs do not provide probability estimates directly; these are usually obtained through an extra calibration step, which can be a limitation for certain applications. Lastly, SVMs can be sensitive to the choice of regularization parameters and to the scale of the data, so features are normally standardized before training.
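The sensitivity to feature scale is easy to see with the RBF kernel, exp(-gamma * ||x - z||^2), which depends on distances between samples. The sketch below uses hypothetical samples of (age in years, income in dollars) and an arbitrary `gamma` of 0.5. It compares squared distances and kernel values before and after standardizing each feature to zero mean and unit variance.

```python
import math
from statistics import mean, pstdev

def squared_distance(x, z):
    """Squared Euclidean distance between two samples."""
    return sum((a - b) ** 2 for a, b in zip(x, z))

def rbf_kernel(x, z, gamma=0.5):
    """RBF kernel value; gamma is an arbitrary illustrative choice."""
    return math.exp(-gamma * squared_distance(x, z))

def standardize(rows):
    """Rescale every column to zero mean and unit (population) variance."""
    columns = list(zip(*rows))
    means = [mean(c) for c in columns]
    stds = [pstdev(c) for c in columns]
    return [
        tuple((v - m) / s for v, m, s in zip(row, means, stds))
        for row in rows
    ]

# Hypothetical samples: (age in years, income in dollars).
samples = [(25.0, 30000.0), (26.0, 90000.0), (60.0, 31000.0)]
scaled = standardize(samples)

# Raw units: income differences swamp age differences entirely.
raw_01 = squared_distance(samples[0], samples[1])
raw_02 = squared_distance(samples[0], samples[2])

# Standardized units: both features contribute on a comparable scale.
scaled_01 = squared_distance(scaled[0], scaled[1])
scaled_02 = squared_distance(scaled[0], scaled[2])

print("raw distances:   ", raw_01, raw_02)
print("scaled distances:", scaled_01, scaled_02)
print("raw kernel:   ", rbf_kernel(samples[0], samples[1]))
print("scaled kernel:", rbf_kernel(scaled[0], scaled[1]))
```

In raw units the 35-year age gap between the first and third samples barely registers next to the income differences, and the kernel value underflows to zero. After standardization the two distances are of similar size and the kernel returns a usable similarity.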

Conclusion

Support Vector Machines are a robust and versatile tool in the machine learning toolkit. They excel in classification tasks, particularly in high-dimensional spaces, and rest on a well-understood theoretical foundation. While they come with some computational challenges and require careful parameter tuning, their ability to find the maximum-margin hyperplane and to use the kernel trick makes them a powerful choice for many applications. As machine learning continues to evolve, SVMs remain an important algorithm for practitioners and researchers alike.