MapReduce: Simplifying Big Data Processing

Introduction

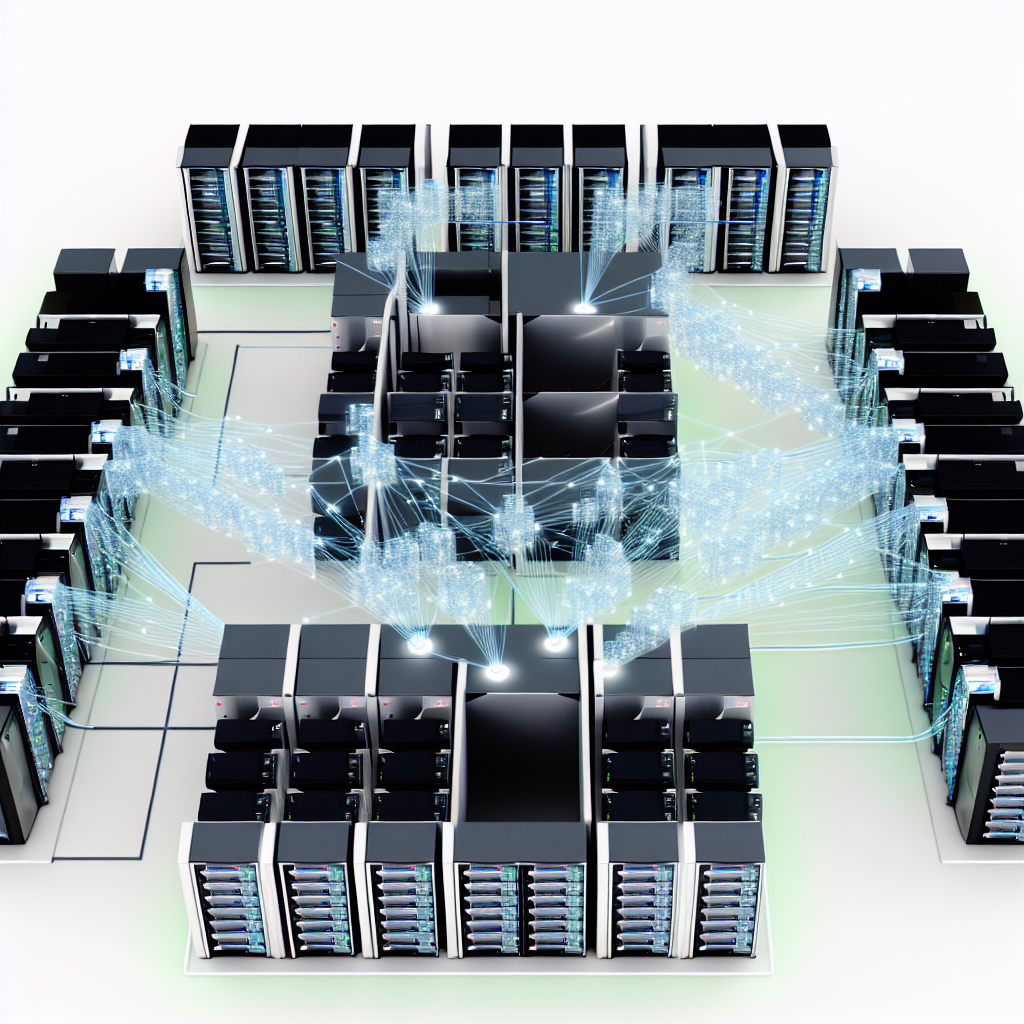

MapReduce is a programming model, and an associated implementation, for processing and generating large datasets. Introduced by Jeffrey Dean and Sanjay Ghemawat at Google in 2004, it lets programmers express a computation as two functions, map and reduce. The framework then handles parallelizing the work across a cluster of commodity machines, scheduling tasks, and recovering from failures. This article explains how the model works, its main components, and its role in big data processing.

The Concept of MapReduce

The core idea behind MapReduce is to split a large job into smaller tasks, run them in parallel, and then combine their results. The programmer supplies two functions, which define the model's two phases: the map phase and the reduce phase. In the map phase, the input data is divided into chunks that are processed independently. Between the two phases, the framework groups the intermediate results by key (the shuffle and sort step). The reduce phase then combines each group to form the final result. Because the map tasks are independent of one another, as are the reduce tasks, the work can be spread across many machines.
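
The whole pipeline can be simulated in a single process to make the data flow concrete. This is a minimal sequential sketch, not how any real framework is implemented: `run_mapreduce`, `word_count_map`, and `word_count_reduce` are hypothetical names, and a Python dictionary of lists stands in for the distributed shuffle.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, Iterator, List, Tuple

def run_mapreduce(
    records: Iterable[str],
    map_fn: Callable[[str], Iterator[Tuple[str, int]]],
    reduce_fn: Callable[[str, List[int]], int],
) -> Dict[str, int]:
    # Map phase: each record is passed to map_fn on its own.
    intermediate: Dict[str, List[int]] = defaultdict(list)
    for record in records:
        for key, value in map_fn(record):
            # Grouping values by key is the in-memory stand-in for shuffle and sort.
            intermediate[key].append(value)
    # Reduce phase: combine each key's values, visiting keys in sorted order.
    return {key: reduce_fn(key, values) for key, values in sorted(intermediate.items())}

def word_count_map(line: str) -> Iterator[Tuple[str, int]]:
    # Hypothetical word-count map: emit (word, 1) per occurrence.
    for word in line.split():
        yield word.lower(), 1

def word_count_reduce(word: str, counts: List[int]) -> int:
    # Hypothetical word-count reduce: add up the 1s.
    return sum(counts)

docs = ["the quick brown fox", "The lazy dog", "the fox"]
print(run_mapreduce(docs, word_count_map, word_count_reduce))
# {'brown': 1, 'dog': 1, 'fox': 2, 'lazy': 1, 'quick': 1, 'the': 3}
```

Only the two user-supplied functions are specific to word count; the driver would work unchanged for any map and reduce pair with these types.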

Map Phase

During the map phase, the input data is divided into smaller segments (input splits), and a map function is applied to each record in each segment. The map function emits intermediate key-value pairs. For instance, in a word count application, the map function reads a piece of text and emits the pair (word, 1) for every word it encounters; the counts are added up later, in the reduce phase. This phase is highly parallelizable, because each segment can be processed independently of the others.
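
A minimal sketch of the map phase for word count, assuming the input is a list of text lines. `split_input`, `map_split`, and `map_words` are hypothetical helpers. A thread pool stands in for a cluster, so the sketch only shows that splits can be processed independently; in CPython, threads will not speed up this CPU-bound work because of the global interpreter lock.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Iterator, List, Tuple

def map_words(line: str) -> Iterator[Tuple[str, int]]:
    """Word-count map function: emit (word, 1) for every word occurrence."""
    for word in line.split():
        yield word.lower(), 1

def map_split(split: List[str]) -> List[Tuple[str, int]]:
    """Run the map function over one input split; it touches no shared state."""
    return [pair for line in split for pair in map_words(line)]

def split_input(lines: List[str], num_splits: int) -> List[List[str]]:
    """Divide the input into roughly equal contiguous segments."""
    size = max(1, -(-len(lines) // num_splits))  # ceiling division
    return [lines[i:i + size] for i in range(0, len(lines), size)]

lines = ["the quick brown fox", "jumps over", "the lazy dog"]
splits = split_input(lines, num_splits=2)
with ThreadPoolExecutor(max_workers=2) as pool:
    # pool.map returns results in the same order as the splits.
    outputs = list(pool.map(map_split, splits))
print(outputs)
```

Each element of `outputs` is one map task's intermediate output; nothing has been summed yet, which is why "the" appears once in each split's list.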

Shuffle and Sort

After the map phase, the intermediate key-value pairs are shuffled and sorted. A partitioning function of the key (by default, typically a hash of the key modulo the number of reduce tasks) assigns each pair to a reduce task, so all pairs sharing a key are sent to the same reducer. Each reducer then sorts the pairs it receives by key, which places all values associated with the same key next to each other. This grouping is what lets the reduce function see every value for a key at once. The shuffle and sort phase can be resource-intensive, because it moves large amounts of data across the network and sorts it.
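
The sketch below simulates shuffle and sort in memory. It assumes a hash partitioner (key hash modulo the number of reducers) built on `zlib.crc32` rather than Python's built-in `hash`, whose results for strings vary between interpreter runs. The `partition` and `shuffle` names are hypothetical.

```python
import zlib
from itertools import groupby
from operator import itemgetter
from typing import List, Tuple

Pair = Tuple[str, int]

def partition(key: str, num_reducers: int) -> int:
    """Hash partitioner: a given key is always routed to the same reducer."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers

def shuffle(map_outputs: List[List[Pair]], num_reducers: int) -> List[List[Tuple[str, List[int]]]]:
    # Shuffle: route every intermediate pair to its reducer's bucket.
    buckets: List[List[Pair]] = [[] for _ in range(num_reducers)]
    for output in map_outputs:
        for key, value in output:
            buckets[partition(key, num_reducers)].append((key, value))
    # Sort: each reducer orders its bucket by key so equal keys are adjacent,
    # then collapses each run of equal keys into (key, [values]).
    reducer_inputs = []
    for bucket in buckets:
        bucket.sort(key=itemgetter(0))
        groups = [(key, [value for _, value in run]) for key, run in groupby(bucket, key=itemgetter(0))]
        reducer_inputs.append(groups)
    return reducer_inputs

map_outputs = [[("the", 1), ("fox", 1)], [("the", 1), ("dog", 1)]]
for reducer_id, groups in enumerate(shuffle(map_outputs, num_reducers=2)):
    print(reducer_id, groups)
```

Which reducer a key lands on depends on its hash, but both ("the", 1) pairs, emitted by different map tasks, always end up in the same group.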

Reduce Phase

In the reduce phase, the reduce function is called once for each key, receiving that key together with all of its associated values. It combines those values into a smaller result, often a single value. Continuing the word count example, the reduce function sums the 1s emitted for each word to produce that word's total count. The outputs of all reduce calls together form the final output of the MapReduce job.
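
A minimal sketch of the reduce side of word count, assuming the shuffle has already produced `(word, [counts])` groups. `reduce_word_count` and `run_reduce` are hypothetical names; a real framework would call the reduce function for many groups on many reducers in parallel.

```python
from typing import Dict, Iterable, List, Tuple

def reduce_word_count(word: str, counts: Iterable[int]) -> int:
    """Word-count reduce function: sum the 1s emitted for this word."""
    return sum(counts)

def run_reduce(grouped: List[Tuple[str, List[int]]]) -> Dict[str, int]:
    # The reduce function is invoked once per key, with all of that key's values.
    return {word: reduce_word_count(word, counts) for word, counts in grouped}

grouped = [("dog", [1]), ("fox", [1, 1]), ("the", [1, 1, 1])]
print(run_reduce(grouped))  # {'dog': 1, 'fox': 2, 'the': 3}
```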

Fault Tolerance

One of the strengths of the MapReduce framework is its built-in fault tolerance. If a node in the cluster fails during a job, the framework automatically reschedules that node's tasks on other nodes, so the job can still complete despite hardware failures. Re-executing a task is straightforward when the map and reduce functions are deterministic, because a re-run then produces the same output. The data itself is protected by the underlying distributed file system (the Google File System in Google's implementation, HDFS in Hadoop), which stores multiple replicas of each block.
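
Re-execution can be illustrated with a toy, single-process scheduler. Everything here is hypothetical: `WorkerFailure` simulates a machine crash, `run_with_reexecution` plays the role of the master, and a fixed set of "dead" workers replaces real failure detection (in Google's design, the master detects failures by periodically pinging workers).

```python
from typing import Callable, Dict, List

class WorkerFailure(Exception):
    """Hypothetical: raised to simulate a worker machine crashing mid-task."""

Task = Callable[[str], int]

def run_with_reexecution(tasks: Dict[str, Task], workers: List[str], max_attempts: int = 3) -> Dict[str, int]:
    """Toy master: run each task on a worker and reassign it on failure."""
    results: Dict[str, int] = {}
    for i, (task_id, task) in enumerate(tasks.items()):
        for attempt in range(max_attempts):
            worker = workers[(i + attempt) % len(workers)]  # a different worker each attempt
            try:
                results[task_id] = task(worker)
                break
            except WorkerFailure:
                continue  # reschedule the same task on the next worker
        else:
            raise RuntimeError(f"{task_id} failed after {max_attempts} attempts")
    return results

DEAD_WORKERS = {"node-b"}  # hypothetical: this machine "crashes" whenever it runs a task

def make_task(n: int) -> Task:
    def task(worker: str) -> int:
        if worker in DEAD_WORKERS:
            raise WorkerFailure(worker)
        return n * n  # deterministic, so a re-run elsewhere gives the same answer
    return task

tasks = {f"map-{n}": make_task(n) for n in range(4)}
print(run_with_reexecution(tasks, ["node-a", "node-b", "node-c"]))
# {'map-0': 0, 'map-1': 1, 'map-2': 4, 'map-3': 9}
```

Here `map-1` is first assigned to `node-b`, fails, and is rerun on `node-c`; the caller sees a complete result either way.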

Applications of MapReduce

MapReduce has been applied in many domains, including data mining, machine learning, and large-scale data processing. It is well suited to batch jobs over massive datasets, such as building web search indexes, analyzing log files, and processing scientific data. Google used MapReduce internally, and companies such as Yahoo and Facebook used Hadoop, an open-source implementation of MapReduce, to process petabytes of data.
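
Web indexing maps naturally onto the model. This is a minimal in-memory sketch of building an inverted index: map emits `(word, document ID)` pairs and reduce collects each word's sorted list of document IDs. `index_map`, `index_reduce`, and the sample pages are hypothetical, and a dictionary again stands in for the shuffle.

```python
from collections import defaultdict
from typing import Dict, Iterator, List, Tuple

def index_map(doc_id: str, text: str) -> Iterator[Tuple[str, str]]:
    """Emit (word, doc_id) once per distinct word in the document."""
    for word in set(text.lower().split()):
        yield word, doc_id

def index_reduce(word: str, doc_ids: List[str]) -> List[str]:
    """Posting list: sorted, de-duplicated IDs of documents containing the word."""
    return sorted(set(doc_ids))

pages = {"page1": "MapReduce processes big data", "page2": "big clusters process data"}
grouped: Dict[str, List[str]] = defaultdict(list)
for doc_id, text in pages.items():
    for word, d in index_map(doc_id, text):
        grouped[word].append(d)  # stand-in for shuffle: group IDs by word
index = {word: index_reduce(word, ids) for word, ids in sorted(grouped.items())}
print(index["big"], index["data"], index["mapreduce"])
# ['page1', 'page2'] ['page1', 'page2'] ['page1']
```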

Challenges and Limitations

Despite its advantages, MapReduce has limitations. Setting up and operating a cluster requires expertise in distributed systems. Some computations fit the model poorly: iterative algorithms, common in machine learning, must run as a chain of separate jobs, each reading its input from and writing its output to the distributed file system, and this repeated disk I/O makes them slow. The shuffle and sort phase can also become a bottleneck, especially when the intermediate data is very large. Newer systems, such as Apache Spark, address some of these limitations, for example by keeping intermediate data in memory.
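
One standard way to shrink shuffle traffic, described in the original MapReduce paper, is an optional combiner: a function that partially reduces each map task's output locally before it is sent over the network. It is only valid when the reduction is commutative and associative, as summing word counts is. The helper names below are hypothetical; the sketch simply compares how many pairs one map task would ship with and without combining.

```python
from collections import Counter
from typing import List, Tuple

def map_words(line: str) -> List[Tuple[str, int]]:
    """Word-count map: one (word, 1) pair per occurrence."""
    return [(word.lower(), 1) for word in line.split()]

def combine(pairs: List[Tuple[str, int]]) -> List[Tuple[str, int]]:
    """Combiner: pre-sum counts on the map side, before anything is shuffled."""
    totals = Counter()
    for word, count in pairs:
        totals[word] += count
    return sorted(totals.items())

split = ["the cat saw the dog", "the dog saw the cat"]
raw = [pair for line in split for pair in map_words(line)]
combined = combine(raw)
print(len(raw), len(combined))  # 10 4
```

The reducers still sum whatever arrives, so the final counts are unchanged; only the volume of intermediate data crossing the network shrinks.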

Conclusion

MapReduce transformed the way large datasets are processed by providing a scalable, fault-tolerant model for distributed computing. Its ability to process massive amounts of data in parallel made it a cornerstone of big data processing. Despite its limitations, the model and the tools that evolved around it remain an important part of modern data processing infrastructure.