K-means Clustering

Introduction to K-means Clustering

K-means clustering is a widely used unsupervised machine learning algorithm that partitions a dataset into K distinct, non-overlapping groups, or clusters. Each cluster is represented by its centroid, the point at the average position of all the points assigned to that cluster. The objective of K-means clustering is to minimize the variance within each cluster, measured as the sum of squared distances from each point to its centroid; for a fixed dataset, this is equivalent to maximizing the variance between different clusters. The method is useful in fields such as market segmentation, image compression, and pattern recognition.
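The objective described above can be written compactly. The notation here is an assumption introduced for illustration and does not come from the original text: x_i is a data point, C_k is the set of points assigned to cluster k, and \mu_k is the centroid of that cluster.

```latex
J = \sum_{k=1}^{K} \sum_{x_i \in C_k} \lVert x_i - \mu_k \rVert^2,
\qquad
\mu_k = \frac{1}{\lvert C_k \rvert} \sum_{x_i \in C_k} x_i
```

Because the total sum of squared distances to the overall mean is fixed for a given dataset and splits into a within-cluster part and a between-cluster part, lowering J necessarily raises the between-cluster part.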

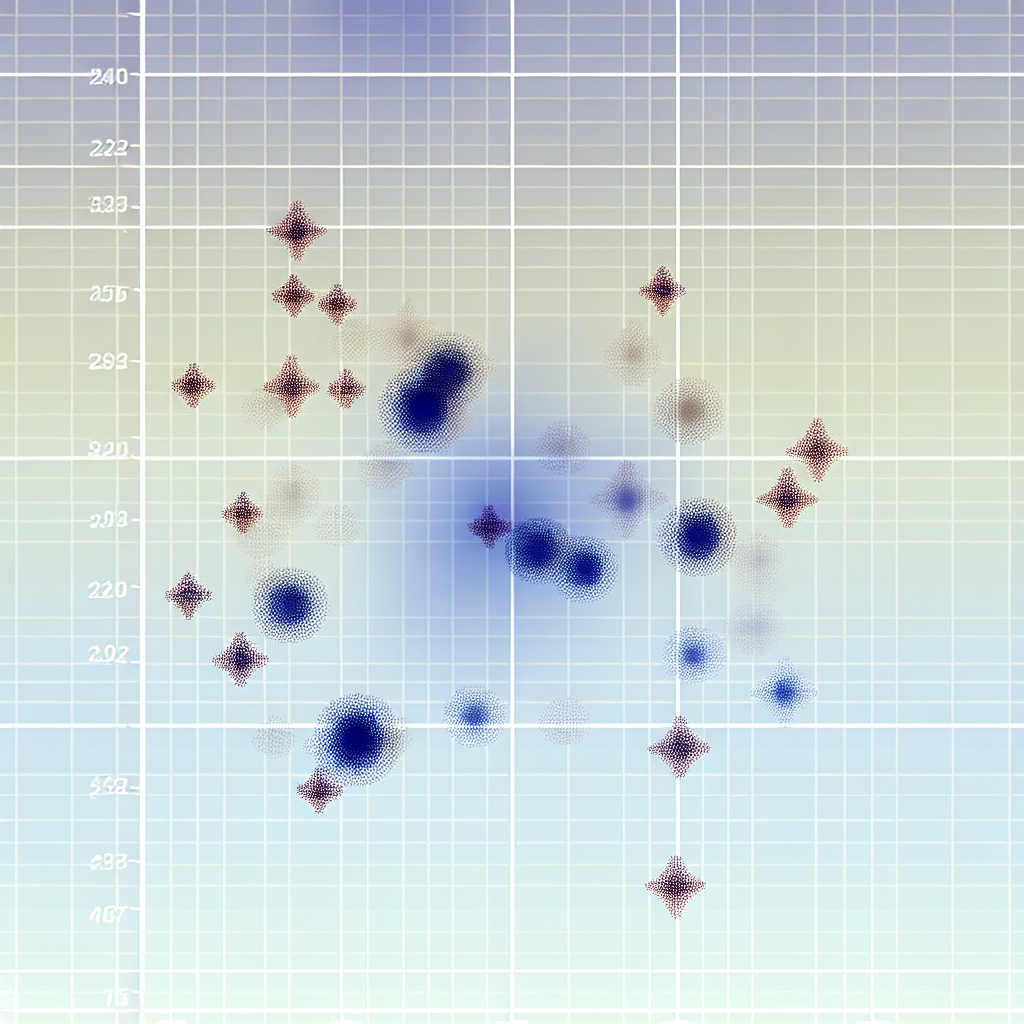

How K-means Clustering Works

The K-means clustering algorithm is iterative. First, the number of clusters (K) is chosen, and K random points from the dataset are selected as the initial centroids. Each data point is then assigned to its nearest centroid, forming K clusters (the assignment step). Each centroid is then recalculated as the mean of all the points in its cluster (the update step). The assignment and update steps are repeated until the centroids no longer change significantly or a predefined number of iterations is reached. The result is a set of K clusters whose intra-cluster variance is at a local minimum, which is not necessarily the global one.
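The steps above can be sketched in plain Python. This is a minimal illustration rather than a reference implementation: the function names (`squared_distance`, `mean_point`, `kmeans`), the `tol` and `seed` parameters, and the choice to leave an empty cluster's centroid where it was are all assumptions made for this example.

```python
import random

def squared_distance(p, q):
    """Squared Euclidean distance between two equal-length points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def mean_point(points):
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))

def kmeans(points, k, max_iters=100, tol=1e-9, seed=0):
    """Return (centroids, labels) after repeating the assignment and update steps."""
    rng = random.Random(seed)
    # Initialization: pick k distinct data points as the starting centroids.
    centroids = rng.sample(points, k)
    labels = [0] * len(points)
    for _ in range(max_iters):
        # Assignment step: each point goes to its nearest centroid.
        labels = [
            min(range(k), key=lambda j: squared_distance(p, centroids[j]))
            for p in points
        ]
        # Update step: each centroid becomes the mean of its assigned points.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            # Assumed convention: an empty cluster keeps its previous centroid.
            new_centroids.append(mean_point(members) if members else centroids[j])
        # Stop once no centroid moved by more than the tolerance (squared distance).
        shift = max(squared_distance(c, n) for c, n in zip(centroids, new_centroids))
        centroids = new_centroids
        if shift <= tol:
            break
    return centroids, labels

# Two well-separated groups of three points each.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
centroids, labels = kmeans(points, k=2)
print(centroids)
print(labels)
```

Which cluster receives label 0 and which receives label 1 depends on the random initialization, so only the grouping itself is meaningful, not the label values.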

Choosing the Number of Clusters

Selecting the appropriate number of clusters (K) is a crucial step in the K-means clustering process. A common method for determining a suitable number of clusters is the ‘elbow method,’ which involves plotting the sum of squared distances (SSD) between data points and their respective centroids for different values of K. The ‘elbow’ point, where the rate of decrease in SSD slows down, indicates a suitable number of clusters. Another approach is the ‘silhouette method,’ which measures the quality of clustering by calculating the average silhouette width for different values of K. The silhouette width ranges from -1 to 1, and a higher average indicates better-defined clusters.
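Both quantities can be computed directly from a clustering. The sketch below uses plain Python and a compact K-means loop of its own; the function names (`sqdist`, `kmeans`, `ssd`, `mean_silhouette`), the toy `data`, and the single fixed seed are assumptions chosen for illustration. In practice, several random restarts per value of K are common, since one run may end in a poor local minimum.

```python
import random

def sqdist(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def kmeans(points, k, iters=100, seed=0):
    """Compact assignment/update loop; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)
    for _ in range(iters):
        labels = [min(range(k), key=lambda j: sqdist(p, centroids[j])) for p in points]
        updated = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                updated.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                updated.append(centroids[j])  # assumed: empty cluster keeps its centroid
        if updated == centroids:
            break
        centroids = updated
    return centroids, labels

def ssd(points, centroids, labels):
    """Sum of squared distances from each point to its assigned centroid."""
    return sum(sqdist(p, centroids[lab]) for p, lab in zip(points, labels))

def mean_silhouette(points, labels):
    """Average silhouette width over all points, using Euclidean distance."""
    clusters = sorted(set(labels))
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two non-empty clusters")
    total = 0.0
    for i, p in enumerate(points):
        # Mean distance from p to the members of each cluster, excluding p itself.
        mean_dist = {}
        for c in clusters:
            dists = [sqdist(p, q) ** 0.5
                     for j, q in enumerate(points) if labels[j] == c and j != i]
            mean_dist[c] = sum(dists) / len(dists) if dists else None
        a = mean_dist[labels[i]]  # cohesion: own cluster
        if a is None:
            continue  # a point alone in its cluster contributes 0
        b = min(d for c, d in mean_dist.items() if c != labels[i])  # nearest other cluster
        denom = max(a, b)
        if denom > 0:
            total += (b - a) / denom
    return total / len(points)

# Toy data with three visible groups.
data = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1),
        (5.0, 5.0), (5.2, 4.9), (4.9, 5.1),
        (9.0, 1.0), (9.1, 1.2), (8.8, 0.9)]
for k in range(1, 6):
    centroids, labels = kmeans(data, k)
    line = f"K={k}  SSD={ssd(data, centroids, labels):.3f}"
    if k >= 2:  # the silhouette is undefined for a single cluster
        line += f"  silhouette={mean_silhouette(data, labels):.3f}"
    print(line)
```

Reading the printed table is the manual version of both methods: look for the K after which SSD stops dropping sharply, and for the K with the highest average silhouette width.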

Applications of K-means Clustering

K-means clustering has applications across many domains. In marketing, it is used for customer segmentation, allowing companies to target specific groups with tailored marketing strategies. In image processing, K-means clustering supports image compression by reducing the number of colors in an image to K representative colors while keeping the result visually close to the original. In biology, it is used for gene expression analysis and for identifying genes with similar expression patterns. K-means clustering is also employed in anomaly detection, document clustering, and recommendation systems, among other fields.

Advantages of K-means Clustering

One of the main advantages of K-means clustering is its simplicity and ease of implementation. The algorithm is computationally efficient: the cost of each iteration grows linearly with the number of data points, the number of clusters, and the number of dimensions, which makes it suitable for large datasets. K-means clustering also scales well because the assignment step treats each point independently and can therefore be parallelized. Furthermore, the resulting clusters are easy to interpret, since each one is summarized by a single centroid, which helps in gaining insight into the underlying structure of the data. The algorithm can be applied to many kinds of numeric data and applications.

Limitations of K-means Clustering

Despite its advantages, K-means clustering has several limitations. One major drawback is its sensitivity to the initial selection of centroids: different starting points can lead to different clustering results. The algorithm also implicitly assumes that clusters are roughly spherical and have equal variance, which may not be the case in real-world data. Additionally, K-means clustering is sensitive to outliers, which can pull cluster centroids away from the bulk of the data and lead to suboptimal results. Finally, the number of clusters (K) must be specified in advance, which is difficult when the true number of clusters is unknown.
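Two of these limitations are easy to reproduce on toy data. The sketch below is an illustration under stated assumptions: `lloyd` is a hypothetical helper that runs the assignment and update steps from explicitly supplied initial centroids, and the points are invented for the example. The first part shows two initializations converging to different results on the same data; the second shows a single outlier shifting a centroid.

```python
def sqdist(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def mean_point(points):
    """Coordinate-wise mean of a non-empty list of points."""
    return tuple(sum(c) / len(points) for c in zip(*points))

def lloyd(points, centroids, iters=100):
    """Run assignment/update steps from the given centroids; return (centroids, ssd)."""
    centroids = [tuple(c) for c in centroids]
    k = len(centroids)
    for _ in range(iters):
        labels = [min(range(k), key=lambda j: sqdist(p, centroids[j])) for p in points]
        updated = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            updated.append(mean_point(members) if members else centroids[j])
        if updated == centroids:
            break
        centroids = updated
    total = sum(sqdist(p, centroids[lab]) for p, lab in zip(points, labels))
    return centroids, total

# Sensitivity to initialization: four corners of a long, thin rectangle.
corners = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0), (10.0, 2.0)]
good_centroids, good_ssd = lloyd(corners, [(0.0, 0.0), (10.0, 0.0)])
bad_centroids, bad_ssd = lloyd(corners, [(0.0, 0.0), (0.0, 2.0)])
print(good_centroids, good_ssd)  # splits left from right
print(bad_centroids, bad_ssd)    # splits bottom from top and stays there

# Sensitivity to outliers: one distant point drags the mean away from the group.
cluster = [(1.0, 1.0), (2.0, 1.0), (1.0, 2.0), (2.0, 2.0)]
outlier = (21.5, 21.5)
print(mean_point(cluster))
print(mean_point(cluster + [outlier]))
```

The second initialization stops at a configuration with a much larger sum of squared distances than the first, even though both runs satisfy the stopping condition. This is why running the algorithm from several initializations and keeping the best result is a common precaution.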

Conclusion

K-means clustering is a powerful and versatile tool in the field of data analysis and machine learning. Its simplicity, efficiency, and wide range of applications make it a popular choice for clustering tasks. However, it is essential to be aware of its limitations and carefully consider the choice of the number of clusters and the impact of outliers. By understanding the strengths and weaknesses of K-means clustering, practitioners can effectively leverage this algorithm to uncover valuable insights from their data and make informed decisions.