Understanding Decision Trees

Introduction

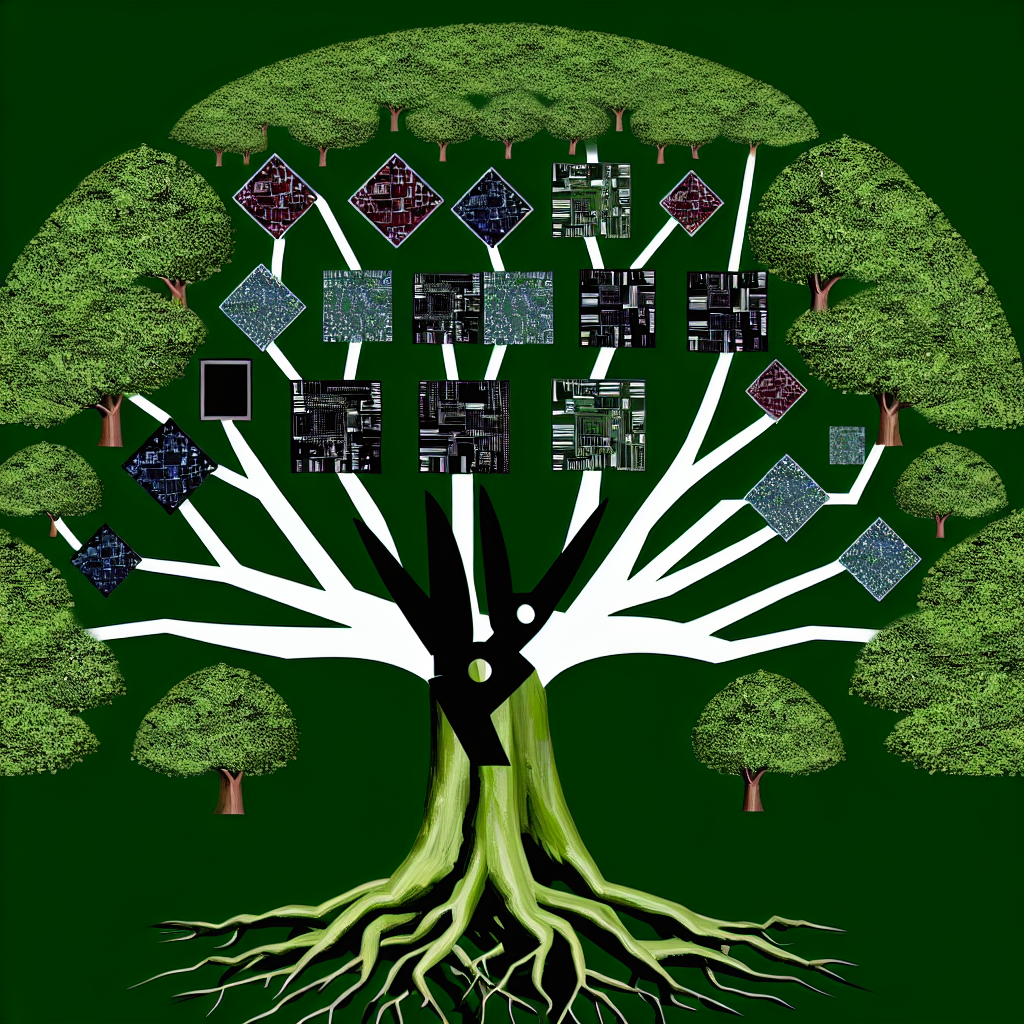

Decision trees are a popular and powerful tool in machine learning and data mining. They are a supervised learning algorithm that can be used for both classification and regression. Because the learned model is a set of human-readable rules, decision trees are easy to understand and interpret, which makes them valuable to beginners and experts in data science alike.
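The same tree structure serves both tasks. What differs is the value a leaf predicts. The following is a minimal sketch that assumes the common CART-style convention: a classification leaf predicts the majority class of its training samples, and a regression leaf (with squared-error splitting) predicts their mean. The function names are hypothetical.

```python
from collections import Counter
from statistics import mean

def classification_leaf_value(labels):
    """Hypothetical helper: the most common class among a leaf's training samples."""
    return Counter(labels).most_common(1)[0][0]

def regression_leaf_value(targets):
    """Hypothetical helper: the mean target value of a leaf's training samples."""
    return mean(targets)

print(classification_leaf_value(["spam", "ham", "spam"]))  # spam
print(regression_leaf_value([3.0, 5.0, 4.0]))              # 4.0
```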

How Decision Trees Work

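A decision tree is a flowchart-like structure in which each internal node represents a test on an attribute (for example, "humidity <= 70"), each branch represents an outcome of that test, and each leaf node holds a prediction: a class label for classification or a numeric value for regression. The goal is to learn, from the training data, simple decision rules that predict the value of a target variable from the data features. To make a prediction, a sample is routed from the root down to a leaf by following the outcome of each test along the way.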
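The routing described above can be sketched with a small node type. This is an illustration under stated assumptions, not a library API: the `Node` class, the `predict` function, and the "play outside" tree with its `humidity` and `wind_speed` features are all hypothetical. Every internal node here uses a numerical `feature <= threshold` test.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Node:
    """Hypothetical node: internal nodes test `feature <= threshold`; leaves carry a prediction."""
    feature: Optional[str] = None
    threshold: Optional[float] = None
    left: Optional["Node"] = None      # branch followed when the test is true
    right: Optional["Node"] = None     # branch followed when the test is false
    prediction: Optional[str] = None   # set only on leaf nodes

def predict(node: Node, sample: dict) -> str:
    """Route a sample from the root to a leaf by following each test's outcome."""
    while node.prediction is None:
        node = node.left if sample[node.feature] <= node.threshold else node.right
    return node.prediction

# A hypothetical two-level tree for deciding whether to play outside.
tree = Node(
    feature="humidity", threshold=70.0,
    left=Node(prediction="play"),
    right=Node(
        feature="wind_speed", threshold=20.0,
        left=Node(prediction="play"),
        right=Node(prediction="stay in"),
    ),
)

print(predict(tree, {"humidity": 65.0, "wind_speed": 30.0}))  # play
print(predict(tree, {"humidity": 85.0, "wind_speed": 25.0}))  # stay in
```

Each prediction costs one test per level, so prediction time grows with the depth of the tree, not with the size of the training set.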

Splitting Criteria

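At each node, a decision tree algorithm evaluates candidate splits and chooses the one that makes the resulting subgroups as homogeneous (pure) as possible. For classification, the two most common impurity measures are Gini impurity (used by CART) and entropy (used by ID3 and C4.5). Information gain is not a third measure but a way of scoring a split: it is the reduction in entropy from the parent node to the size-weighted average entropy of its children. For regression trees, a common criterion is the reduction in variance (mean squared error).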
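These criteria take only a few lines to compute. The following is a minimal sketch, not any particular library's implementation. The names `gini`, `entropy`, `information_gain`, and `best_threshold` are hypothetical. Trying the midpoints between sorted distinct values of a numerical feature is one common way to enumerate candidate thresholds. Passing `impurity=gini` to `information_gain` gives the analogous "Gini decrease" score.

```python
from collections import Counter
from math import log2

def gini(labels):
    """Gini impurity: 1 - sum_k p_k^2 (0 means the node is pure)."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def entropy(labels):
    """Shannon entropy in bits: -sum_k p_k * log2(p_k)."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())

def information_gain(parent, left, right, impurity=entropy):
    """Parent impurity minus the size-weighted impurity of the two children."""
    n = len(parent)
    weighted = len(left) / n * impurity(left) + len(right) / n * impurity(right)
    return impurity(parent) - weighted

def best_threshold(values, labels, impurity=entropy):
    """Try each midpoint between sorted distinct values; return (threshold, gain)."""
    distinct = sorted(set(values))
    best = (None, 0.0)
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(values, labels) if x <= t]
        right = [y for x, y in zip(values, labels) if x > t]
        gain = information_gain(labels, left, right, impurity)
        if gain > best[1]:
            best = (t, gain)
    return best

labels = ["yes", "yes", "no", "no"]
print(gini(labels))     # 0.5
print(entropy(labels))  # 1.0
print(best_threshold([1.0, 2.0, 3.0, 4.0], labels))  # (2.5, 1.0)
```

The split at 2.5 separates the two classes perfectly, so both children are pure and the gain equals the parent's full entropy of 1 bit.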

Advantages of Decision Trees

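Decision trees offer several advantages for machine learning tasks. One of the main ones is that they can handle both numerical and categorical data: a numerical feature is split with a threshold test (such as "income <= 30000"), and a categorical feature with an equality or set-membership test. They are also easy to interpret and visualize. The path from the root to a leaf is a readable sequence of rules, which makes a tree a good tool for explaining the reasons behind a particular prediction.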
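A single example can illustrate both points. This minimal sketch stores a hypothetical loan-approval tree as nested dicts. A categorical feature (`employment`) gets an equality test, and a numerical feature (`income`) gets a threshold test. The hypothetical `explain` function returns the prediction together with the rules along the path that produced it. Support for raw categorical features differs between libraries, so check the documentation of the implementation you use.

```python
def explain(node, sample):
    """Return a tree's prediction for `sample` plus the rules along its path."""
    path = []
    while "prediction" not in node:
        feature = node["feature"]
        value = sample[feature]
        if "threshold" in node:
            # Numerical feature: threshold test.
            passed = value <= node["threshold"]
            op = "<=" if passed else ">"
            path.append(f"{feature} ({value}) {op} {node['threshold']}")
        else:
            # Categorical feature: equality test against one category.
            passed = value == node["category"]
            verb = "is" if passed else "is not"
            path.append(f"{feature} {verb} {node['category']}")
        node = node["yes"] if passed else node["no"]
    return node["prediction"], path

# Hypothetical tree mixing a categorical and a numerical test.
tree = {
    "feature": "employment", "category": "employed",
    "yes": {
        "feature": "income", "threshold": 30000,
        "yes": {"prediction": "review"},
        "no": {"prediction": "approve"},
    },
    "no": {"prediction": "decline"},
}

label, reasons = explain(tree, {"employment": "employed", "income": 45000})
print(label)  # approve
for rule in reasons:
    print(rule)
# employment is employed
# income (45000) > 30000
```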

Disadvantages of Decision Trees

Decision trees also have drawbacks. The main one is overfitting, especially for deep trees. If splitting continues until every leaf is pure, the tree can end up memorizing noise in the training data. Overfitting occurs when a model fits the training data too closely, noise included, and as a result performs poorly on new, unseen data.
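A small experiment makes overfitting concrete. This is a minimal sketch under stated assumptions: `build` is a hypothetical Gini-based learner for a single numerical feature that uses midpoint thresholds. The eight training points are invented so that the underlying rule is "a if x <= 4.5, else b", with one label (at x = 7) flipped to simulate noise. The unrestricted tree reaches perfect training accuracy by carving out a region around the noisy point. A depth-1 tree ignores that point and does better on the new points. The tiny, hand-picked test set makes this illustrative only.

```python
from collections import Counter

def majority(labels):
    """Most common label, used as a leaf's prediction."""
    return Counter(labels).most_common(1)[0][0]

def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def build(xs, ys, depth=0, max_depth=None):
    """Hypothetical 1-D tree learner using Gini splits.

    Stops when a node is pure or max_depth is reached (None = grow until pure).
    Internal nodes are (threshold, left, right) tuples; leaves are labels.
    """
    if len(set(ys)) == 1 or (max_depth is not None and depth >= max_depth):
        return majority(ys)
    best = None
    distinct = sorted(set(xs))
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        if best is None or score < best[1]:
            best = (t, score)
    if best is None:  # identical feature values with mixed labels: cannot split
        return majority(ys)
    t = best[0]
    left_pairs = [(x, y) for x, y in zip(xs, ys) if x <= t]
    right_pairs = [(x, y) for x, y in zip(xs, ys) if x > t]
    return (t,
            build(*zip(*left_pairs), depth=depth + 1, max_depth=max_depth),
            build(*zip(*right_pairs), depth=depth + 1, max_depth=max_depth))

def predict(tree, x):
    while isinstance(tree, tuple):
        t, left, right = tree
        tree = left if x <= t else right
    return tree

def accuracy(tree, xs, ys):
    return sum(predict(tree, x) == y for x, y in zip(xs, ys)) / len(ys)

# Hypothetical data; the training label at x = 7 is deliberately noisy.
train_x = [1, 2, 3, 4, 5, 6, 7, 8]
train_y = ["a", "a", "a", "a", "b", "b", "a", "b"]
test_x = [1.5, 3.5, 5.5, 6.8, 7.2, 8.5]
test_y = ["a", "a", "b", "b", "b", "b"]

for depth in (1, None):
    tree = build(train_x, train_y, max_depth=depth)
    print(depth, f"{accuracy(tree, train_x, train_y):.3f}",
          f"{accuracy(tree, test_x, test_y):.3f}")
# 1 0.875 1.000
# None 1.000 0.667
```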

Pruning

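To reduce overfitting, decision trees can be pruned. Pre-pruning stops growth early, for example by limiting the maximum depth or requiring a minimum number of samples per leaf. Post-pruning grows the full tree and then removes the parts that do not provide a significant improvement in prediction accuracy, usually judged on held-out data. Either way, the simpler model is less likely to fit noise and often generalizes better to unseen data.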
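Reduced-error pruning is one classic post-pruning method. The following minimal sketch works bottom-up on a hypothetical overgrown tree and a hypothetical validation set. It replaces a subtree with a leaf (predicting the subtree's majority training label) whenever doing so does not increase errors on the validation samples that reach it. The dict layout and the names `prune`, `errors`, and `count_leaves` are assumptions made for illustration. Libraries may use other methods. For example, scikit-learn's trees offer cost-complexity pruning through the `ccp_alpha` parameter.

```python
def predict(node, x):
    """Route a single numerical feature value x down to a leaf."""
    while "leaf" not in node:
        node = node["left"] if x <= node["threshold"] else node["right"]
    return node["leaf"]

def errors(node, samples):
    """Number of (x, label) validation samples the (sub)tree misclassifies."""
    return sum(predict(node, x) != y for x, y in samples)

def count_leaves(node):
    if "leaf" in node:
        return 1
    return count_leaves(node["left"]) + count_leaves(node["right"])

def prune(node, samples):
    """Reduced-error pruning, bottom-up; returns a new tree.

    After pruning its children, an internal node is replaced by a leaf
    predicting its stored "majority" label if that does not increase the
    errors on the validation samples reaching the node. A node that no
    validation sample reaches is therefore collapsed as well (0 <= 0).
    """
    if "leaf" in node:
        return node
    left_samples = [(x, y) for x, y in samples if x <= node["threshold"]]
    right_samples = [(x, y) for x, y in samples if x > node["threshold"]]
    node = dict(node,
                left=prune(node["left"], left_samples),
                right=prune(node["right"], right_samples))
    leaf = {"leaf": node["majority"]}
    return leaf if errors(leaf, samples) <= errors(node, samples) else node

# Hypothetical overgrown tree; "majority" is the majority training label at each node.
tree = {
    "threshold": 4.5, "majority": "a",
    "left": {"leaf": "a"},
    "right": {
        "threshold": 6.5, "majority": "b",
        "left": {"leaf": "b"},
        "right": {
            "threshold": 7.5, "majority": "b",
            "left": {"leaf": "a"},
            "right": {"leaf": "b"},
        },
    },
}
# Hypothetical held-out validation samples (not used to grow the tree).
validation = [(2.0, "a"), (5.0, "b"), (6.9, "b"), (7.3, "b"), (8.0, "b")]

pruned = prune(tree, validation)
print(count_leaves(tree), errors(tree, validation))      # 4 2
print(count_leaves(pruned), errors(pruned, validation))  # 2 0
```

The validation set must be separate from the training data. Otherwise the overgrown branches look useful and are never removed.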

Applications of Decision Trees

Decision trees are widely used in fields such as healthcare, finance, and marketing. In healthcare, they can estimate the likelihood that a patient has a particular disease based on their symptoms. In finance, they can be applied to credit-risk assessment or to modeling quantities such as stock prices. In marketing, they can help identify customer segments so that marketing strategies can be tailored to each one.